Family wellness assistant

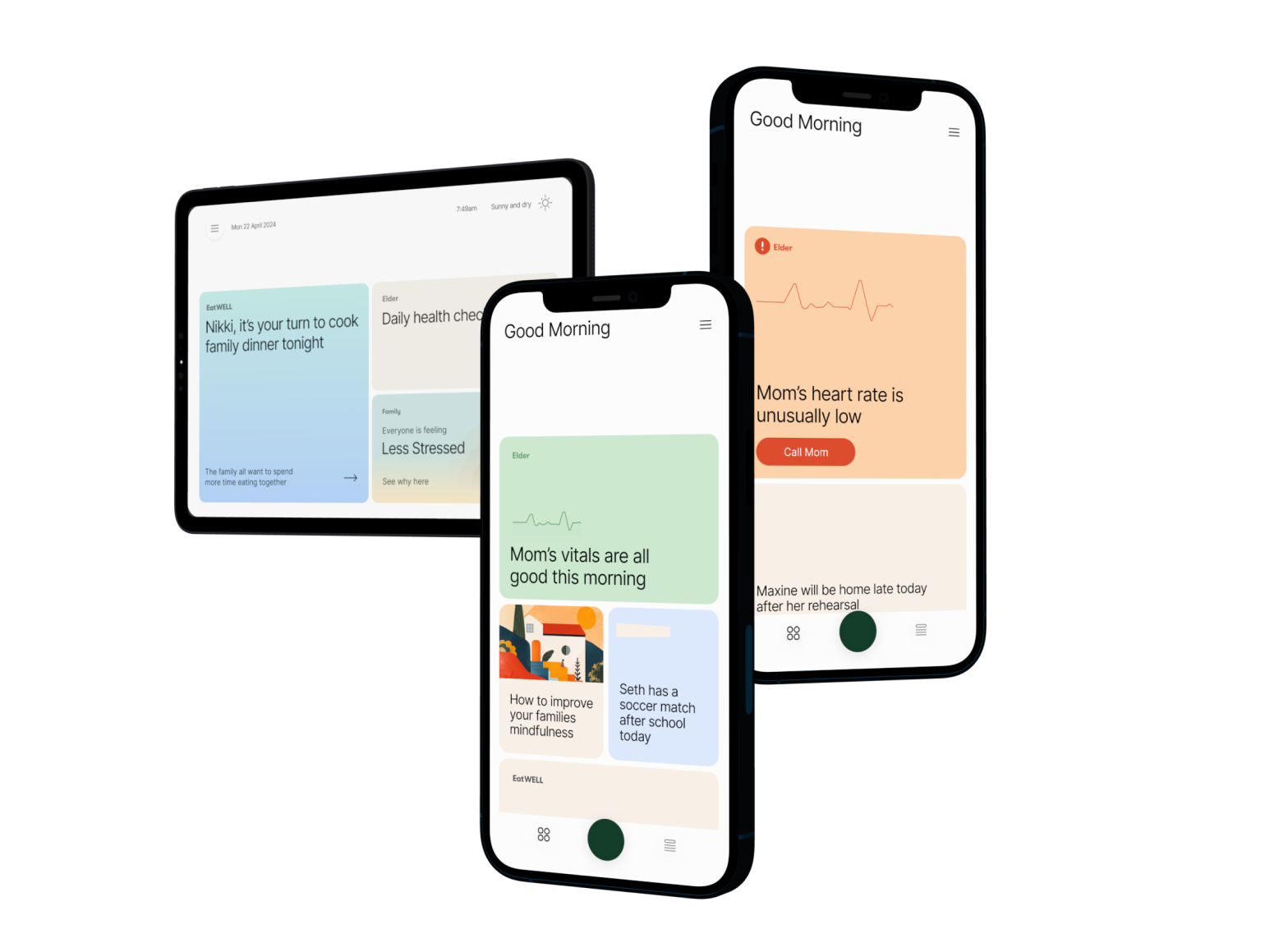

An AI family assistant that turns wellness from 'one more thing to do' into shared routines families can actually stick to.

Most apps are single-player first. Family wellness is a multiplayer game.

At a glance

Client: Global consumer electronics and connected-home company

Format: 12-week parallel design and prototyping engagement

My role: product and prototyping lead – agent UX, interaction model, prototyping & build, team alignment

Team: squad of 8 (me, 2 x product designers, 2 x service designers, 1 x creative technologist, PM, client lead)

What shipped

Fully interactive vision prototype using real data

Product designs and service blueprints

Partnership concepts

The problem & solution

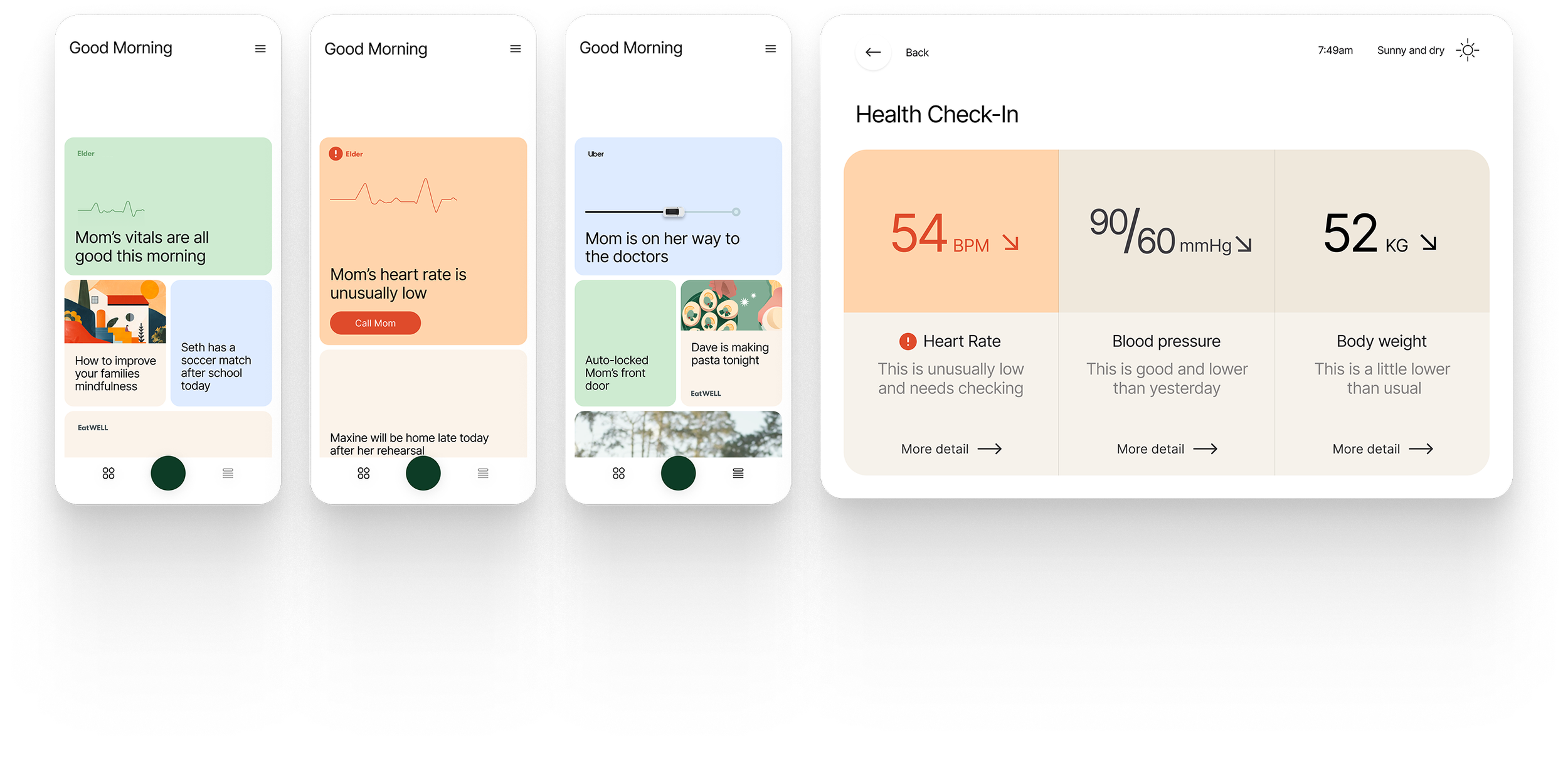

Modern family wellness is a "coordination problem": everyone has different needs, schedules, priorities – and parents are overloaded with decisions. Umi, the context-aware family assistant, reduces that friction by acting as a central family wellness hub: it brings together personalised AI recommendations and real-time data from wearables and home sensors, and coordinates actions across family members.

The key insight – "wellness is social"

This family assistant isn't a solo coach. One of its distinguishing features is a multiplayer-first approach to interacting with AI: it's not about an individual, it's about the family as a whole.

What we designed and built

Across the engagement we produced a suite of prototypes that progressed from quick experiments to a vision prototype ready for stakeholder demos and testing:

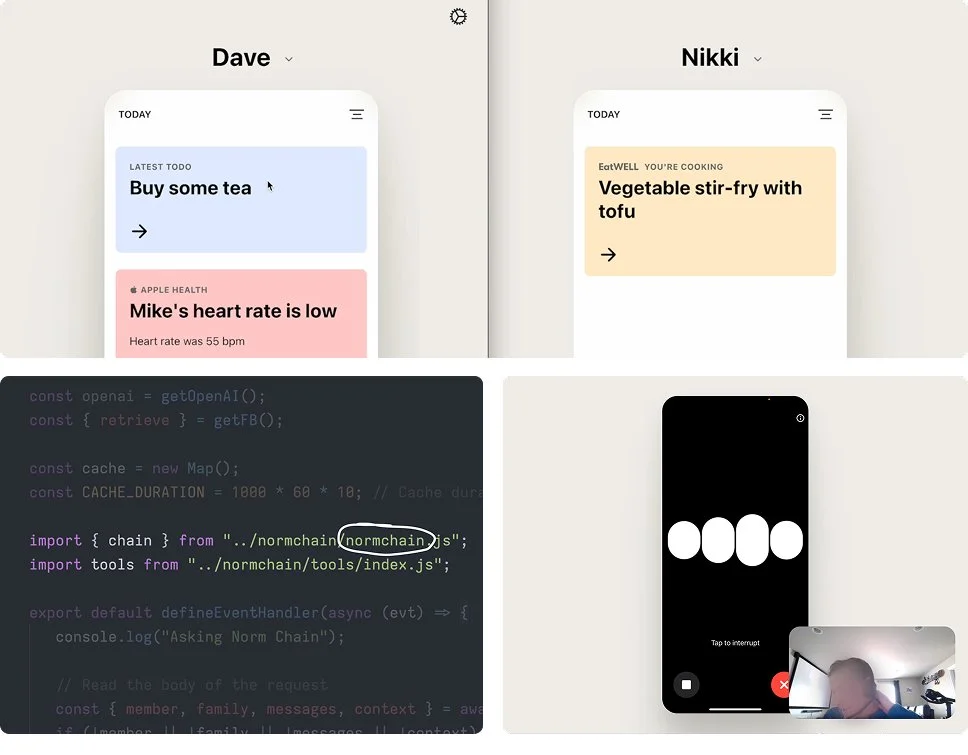

Prototype vignettes and experiments

Fast iterations to explore how an assistant could trigger real-world actions and adapt to context (e.g. lightweight voice agent tests, generative UI explorations, real-time data connections and inference) – used to validate feasibility and shape the interaction model.

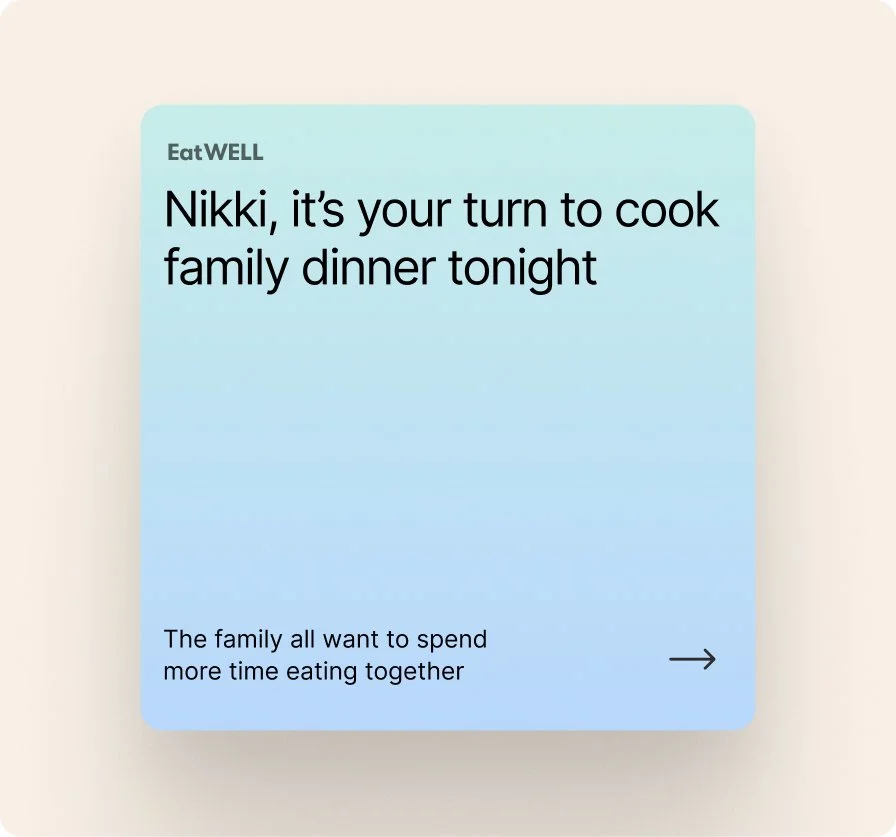

Visual and interaction prototypes

Clickable flows to test key moments, define the interaction model, and explore the look and feel of the mobile experience.

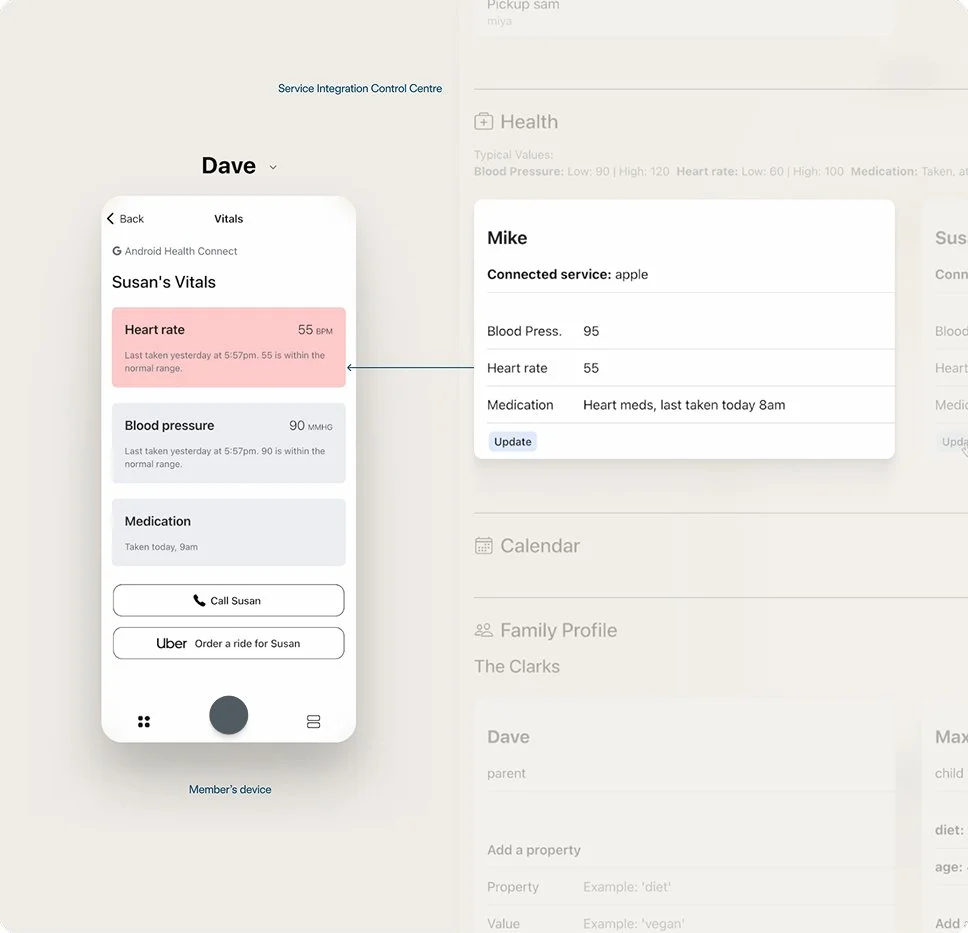

Real-time mobile prototype with back-end data console

A mobile prototype featuring a context-aware conversational assistant and a dynamically adjusting home screen, paired with a back-end console that let us edit underlying family data in real time.

This was the centrepiece because it made the assistant feel “alive” and credible, not scripted.
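To make the console-to-home-screen loop concrete, here is a minimal sketch of one way such a setup could be wired. It is not the engagement's actual code: the `FamilyStore` class, the `home_screen_cards` function, the `sleep_hours` field, and the 7-hour threshold are all hypothetical names and values chosen for illustration. The idea is that every edit made in the console notifies subscribers, and the home screen recomputes its cards from the latest family data.

```python
from typing import Callable, Dict, List

# Hypothetical listener signature: (member, field, new_value)
Listener = Callable[[str, str, object], None]


class FamilyStore:
    """In-memory family data that notifies subscribers on every edit."""

    def __init__(self) -> None:
        self._data: Dict[str, Dict[str, object]] = {}
        self._listeners: List[Listener] = []

    def subscribe(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def update(self, member: str, field: str, value: object) -> None:
        # This is what a console edit would call.
        self._data.setdefault(member, {})[field] = value
        for listener in self._listeners:
            listener(member, field, value)

    def snapshot(self) -> Dict[str, Dict[str, object]]:
        # Copy so that callers cannot mutate the store by accident.
        return {member: dict(fields) for member, fields in self._data.items()}


def home_screen_cards(snapshot: Dict[str, Dict[str, object]]) -> List[str]:
    """Derive home-screen cards from the current data (illustrative rule only)."""
    cards = []
    for member, fields in sorted(snapshot.items()):
        sleep = fields.get("sleep_hours")
        # Assumed threshold of 7 hours, purely for the example.
        if isinstance(sleep, (int, float)) and sleep < 7:
            cards.append(f"{member} slept {sleep}h: suggest an earlier wind-down tonight")
    if not cards:
        cards.append("Everyone is on track today")
    return cards


# Usage: the "home screen" re-renders whenever the "console" edits data.
store = FamilyStore()
rendered: List[List[str]] = []
store.subscribe(lambda *_: rendered.append(home_screen_cards(store.snapshot())))

store.update("Alex", "sleep_hours", 8)
store.update("Sam", "sleep_hours", 5.5)
```

After the second edit, the most recent render contains a single card for Sam, which is the kind of immediate, visible reaction that makes a demo feel live.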

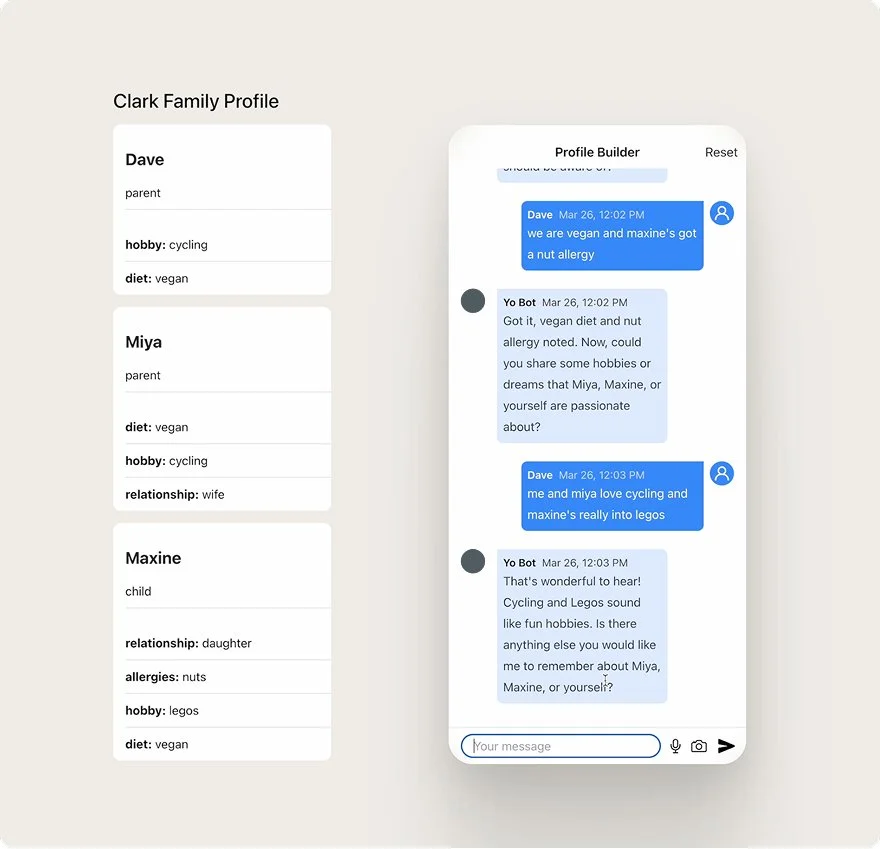

Conversational onboarding prototype (profile inference)

A focused prototype exploring how onboarding could happen through conversation – and how the system could infer and progressively enrich a user/family profile from what people say (instead of forcing form-filling).
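As a rough illustration of progressive profile enrichment, the sketch below merges facts extracted from each utterance into a profile and works out what is still missing. Everything here is an assumption for illustration: the field names, the `extract_facts`, `enrich`, and `next_question` helpers, and above all the regex-based extraction, which is a simple stand-in for whatever language-model inference a real system would use.

```python
import re
from typing import Dict, Optional

# Hypothetical fields the onboarding conversation tries to fill.
REQUIRED_FIELDS = ["children", "goal", "wake_time"]


def extract_facts(utterance: str) -> Dict[str, str]:
    """Pull simple facts out of free text (regex stand-in for model inference)."""
    facts: Dict[str, str] = {}
    text = utterance.lower()

    match = re.search(r"\b(\d+)\s+(?:kids|children)\b", text)
    if match:
        facts["children"] = match.group(1)

    match = re.search(r"\b(?:wake|get) up at (\d{1,2}(?::\d{2})?\s*(?:am|pm)?)", text)
    if match:
        facts["wake_time"] = match.group(1).strip()

    for goal in ("sleep", "exercise", "nutrition"):
        if goal in text:
            facts["goal"] = goal
            break

    return facts


def enrich(profile: Dict[str, str], utterance: str) -> Dict[str, str]:
    """Return a new profile with any newly inferred facts merged in."""
    merged = dict(profile)
    merged.update(extract_facts(utterance))
    return merged


def next_question(profile: Dict[str, str]) -> Optional[str]:
    """Name the first missing field, or None once the profile is complete."""
    for field in REQUIRED_FIELDS:
        if field not in profile:
            return field
    return None


# Usage: two turns of conversation fill the profile without a form.
profile = enrich({}, "We have 2 kids and I want us all to sleep better")
still_missing = next_question(profile)
profile = enrich(profile, "We usually get up at 6:30 am")
```

The design choice worth noting is that the conversation is driven by what the profile still lacks, so people only get asked about things they have not already mentioned.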

Why build real-time prototypes instead of explaining the vision in slides?

A prototype is worth a thousand slides. In any room, people are asking different questions – how the product works, what the interaction model is, which services it integrates with, what the assistant can and can’t do, and how “real” the AI feels. A working prototype answers those questions simultaneously. Slides can’t.

Outcomes

Created a coherent vision prototype that demonstrated the core interaction model and multi-user + AI dynamics

De-risked the product direction by providing an early, interactive version of the experience for testing

Aligned a cross-disciplinary client team around a shared vision and a concrete set of partner integration concepts

Product launched at CES 2025

What I learned

Multi-user agents are coordination products first, AI products second.

Tone is a feature – it drives engagement as much as clever recommendation engines do.

A dashboard isn’t garnish – it’s how families build peace of mind, visibility, and shared momentum.

If you're exploring an AI assistant that has to work with real people, real constraints, and real stakes, I'd love to chat about what we learned and how similar approaches might apply to your context.